RoboGene: Boosting VLA Pre-training via Diversity-Driven Agentic Framework

An automated framework for generating diverse, physically plausible manipulation tasks to enhance Vision-Language-Action model pre-training

The pursuit of general-purpose robotic manipulation is hindered by the scarcity of diverse, real-world interaction data. Unlike data collection from the web in vision or language, robotic data collection is an active process incurring prohibitive physical costs.

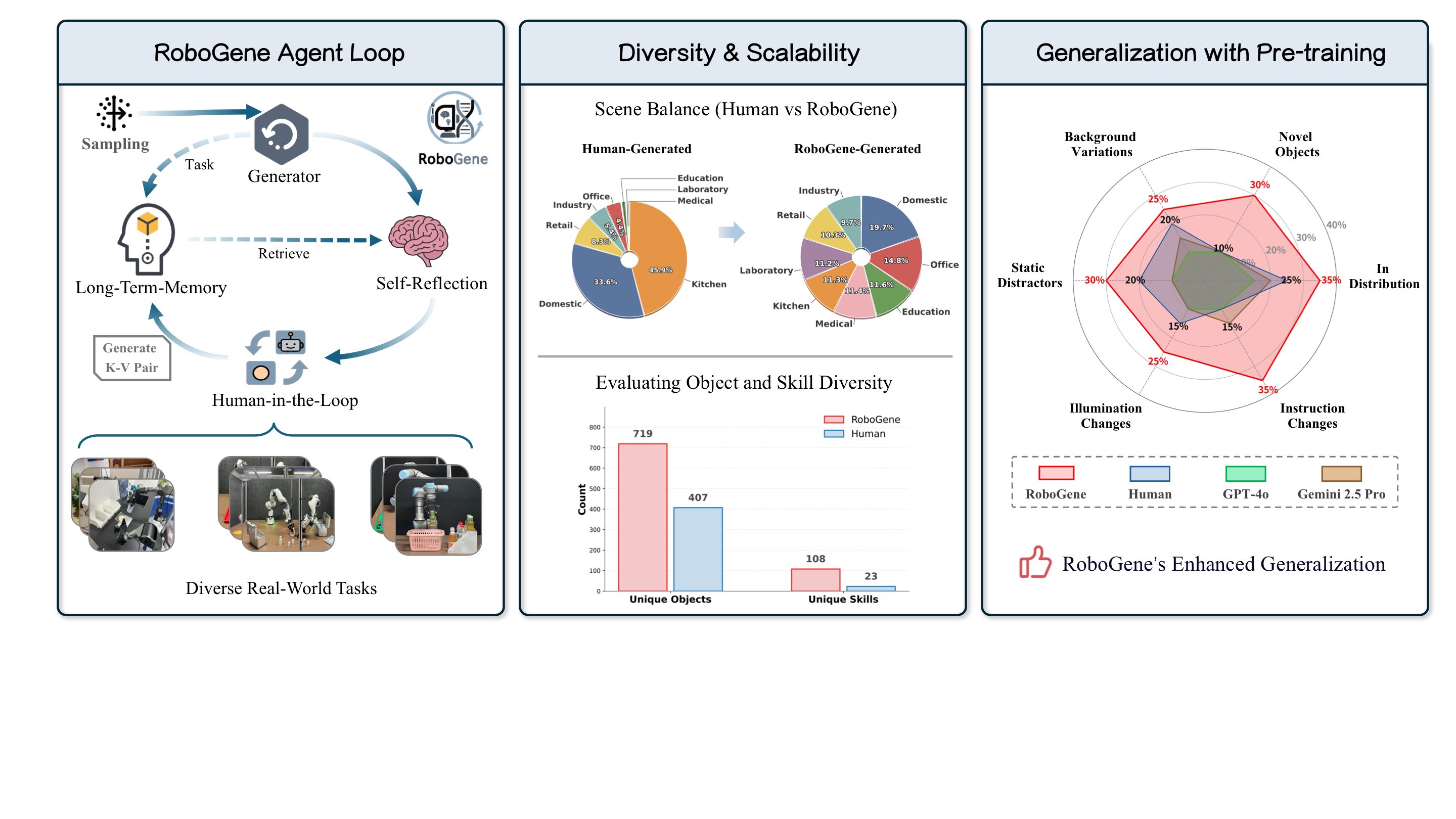

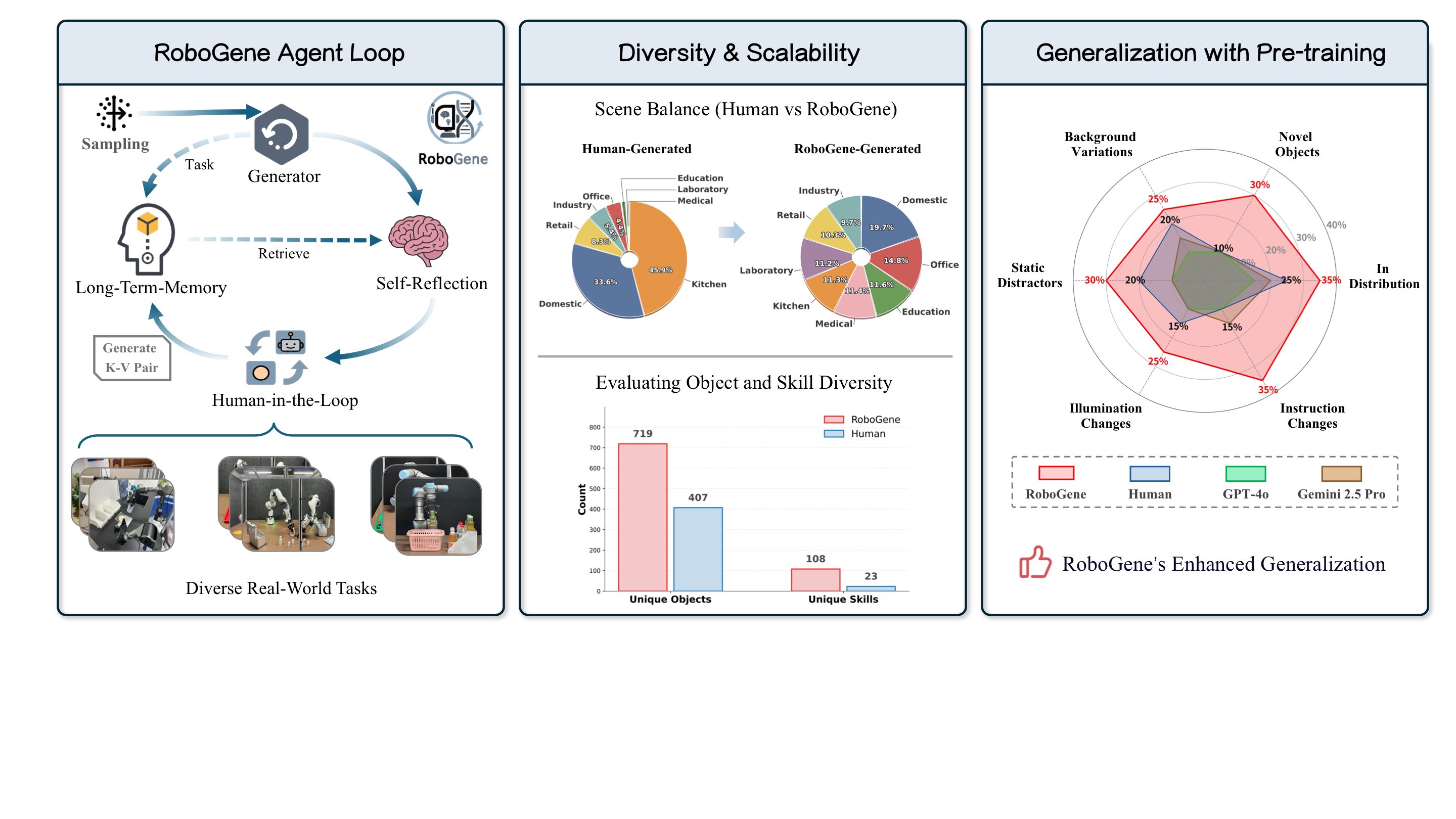

Existing manual methods are unscalable and biased toward common tasks. To address this, we introduce RoboGene, an agentic framework designed to automate the generation of diverse, physically plausible manipulation tasks across single-arm, dual-arm, and mobile robots.

RoboGene integrates three core components: diversity-driven sampling, self-reflection mechanisms, and human-in-the-loop refinement. We collect datasets of 18k trajectories and introduce novel metrics to assess task quality and diversity.

Diversity-driven sampling: implements an LFU (least frequently used) strategy to explore the task space comprehensively, ensuring coverage of edge cases often overlooked by manual curation.

Self-reflection: enforces physical constraints through simulation, filtering out infeasible trajectories and ensuring that tasks are executable.

Human-in-the-loop refinement: continuously refines tasks through human feedback, enabling the system to adapt to specific robot embodiments.
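
To make the LFU idea concrete, here is a minimal Python sketch of least-frequently-used sampling over a pool of task elements. It is an illustration only, not RoboGene's actual implementation: the function name `lfu_sample`, the object names, and the usage counts are all hypothetical. The sampler prefers the elements that have appeared least often in previously generated tasks, which pushes generation toward under-explored parts of the task space.

```python
import random
from collections import Counter


def lfu_sample(candidates, usage_counts, k=1, rng=None):
    """Return the k least-used candidates, breaking ties at random.

    Hypothetical helper: `usage_counts` maps each candidate to how many
    times it has already been used in generated tasks.
    """
    rng = rng or random.Random()
    pool = list(candidates)
    # Shuffle first so the stable sort below breaks ties randomly.
    rng.shuffle(pool)
    pool.sort(key=lambda c: usage_counts[c])
    chosen = pool[:k]
    # Record the new usage so later calls favor other candidates.
    for c in chosen:
        usage_counts[c] += 1
    return chosen


# Assumed example data: objects and how often each was used so far.
objects = ["beaker", "tape", "lettuce", "gear"]
counts = Counter({"beaker": 5, "tape": 2, "lettuce": 0, "gear": 2})

picked = lfu_sample(objects, counts, k=2, rng=random.Random(0))
print(picked)
```

Here `lettuce` is always picked first because it has never been used, and the second pick is either `tape` or `gear`, which are tied on count. Updating the counts after each draw is what keeps repeated calls from collapsing onto the same few elements.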

Evaluated across multiple robot platforms, including single-arm manipulators, dual-arm manipulators, and mobile manipulators.

Automated task generation across multiple embodiments including mobile manipulators, single-arm, and dual-arm systems.

Water Plant

Organize Desk

Assembling Crucible

Replenish Shelves

Sort Buttons

Pour Water

Handover Tape

Open Medical Box

Rinse Lettuce

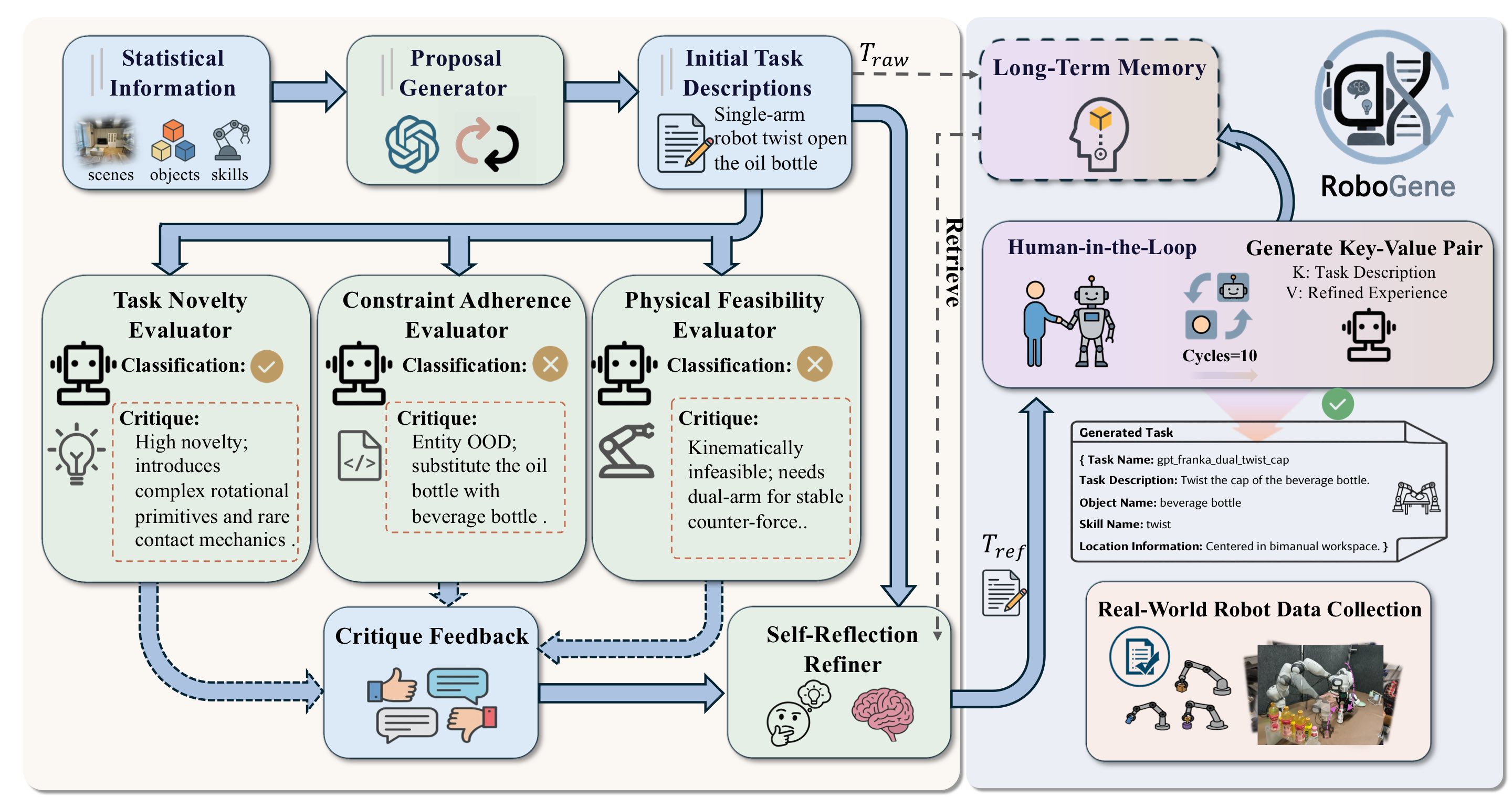

Quantitative assessment of pre-training efficacy and real-world execution demonstrations.

Grill Skewers

Weigh Beakers

Build Blocks

Lubricate Gears

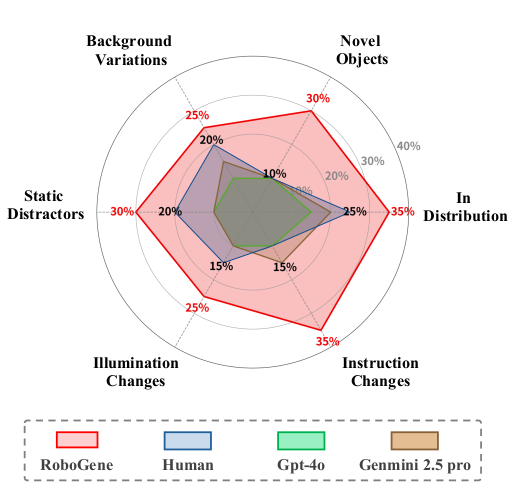

Novel Objects

Illumination Changes

Static Distractors

Background Variations

Instruction Changes

In-Distribution